Recap of the StarRocks 3.0 Community Call - Feature Highlights and Q&A

The StarRocks open source community recently came together for an insightful community call led by Albert Wong, Developer Advocate, and Sida Shen, Product Marketing Manager. The call covered the latest product updates in StarRocks 3.0, ecosystem integrations, community surveys, roadmap plans, and more. Read on for some of the top takeaways from the hour-long discussion.

Link to the full recording here.

Overview of StarRocks 3.0 Capabilities:

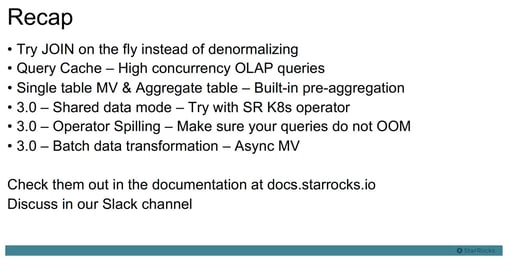

The call highlighted several powerful new features and capabilities released in StarRocks 3.0 that unlock new analytics use cases:

- Shared Data Model: This separates storage from compute, with data persisted in external object stores like S3 instead of directly on cluster nodes. The immediate benefit is the ability to dynamically scale or terminate nodes without data loss, improving elasticity and reducing costs, especially in cloud deployments. Local SSDs are used purely for caching and temporary spill files rather than for persistent storage.

- Asynchronous Materialized Views: These enable more complex data transformations beyond just aggregation by supporting general SELECT queries. Materialized views can join data across tables and even across different external sources like Hive. This is great for pre-aggregations and ELT.

- Operator Spilling: By spilling intermediate query data to disk, StarRocks can now complete complex queries without running out of memory. This ensures analytical workloads can run to completion even on smaller instances. Spilling will continue to be optimized but already unblocks use cases that were previously not possible.
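To make the idea behind operator spilling more concrete, here is a minimal Python sketch of a grouped sum that spills to disk when its in-memory table gets too large. It is a conceptual illustration only, not StarRocks' implementation: the function name `spilling_sum`, the `max_groups_in_memory` budget, and the JSON-lines run files are all assumptions made up for this example.

```python
import heapq
import json
import tempfile
from itertools import groupby
from operator import itemgetter


def _read_run(f):
    """Yield (key, partial_sum) pairs back from one spilled run file."""
    f.seek(0)
    for line in f:
        key, value = json.loads(line)
        yield key, value


def spilling_sum(rows, max_groups_in_memory):
    """Sum values per string key, spilling sorted partial results to temp
    files whenever the in-memory table would exceed the given budget."""
    table = {}
    runs = []  # temp files, each holding one sorted run of partial sums

    def spill():
        f = tempfile.TemporaryFile(mode="w+")
        for key in sorted(table):
            f.write(json.dumps([key, table[key]]) + "\n")
        runs.append(f)
        table.clear()

    for key, value in rows:
        if key not in table and len(table) >= max_groups_in_memory:
            spill()
        table[key] = table.get(key, 0) + value

    # Treat whatever is still in memory as one more sorted run.
    streams = [_read_run(f) for f in runs] + [sorted(table.items())]

    # Merge the sorted runs and combine partial sums that share a key.
    by_key = itemgetter(0)
    result = {}
    for key, group in groupby(heapq.merge(*streams, key=by_key), key=by_key):
        result[key] = sum(value for _, value in group)

    for f in runs:
        f.close()
    return result
```

The trade-off is the one described on the call: the query does extra disk I/O, but it finishes instead of failing when the intermediate state does not fit in memory.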

These capabilities unlock new real-time and high-concurrency analytics on live, heterogeneous data sources for StarRocks users. Everyone is encouraged to test drive 3.0 and provide feedback to the team.

Features You Should Be Taking Advantage Of

In addition to the major features highlighted above, the call also showcased other powerful capabilities released in recent StarRocks versions (v2.4 and v2.5):

- Query Cache: Accelerates queries even when the SQL and data are not identical between them, by caching the intermediate computation results they have in common.

- Real-Time Pre-Aggregation: Synchronous materialized views and aggregated tables allow aggregations to be pre-computed and stored, and they are automatically updated in real time during ingestion. This is useful for real-time workloads with extremely high query concurrency.
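As a rough illustration of what pre-aggregating during ingestion means, the Python sketch below folds each incoming row into a running aggregate so that queries read one precomputed entry instead of scanning raw rows. The class `PreAggregatedTable` and its page/hour/latency columns are hypothetical and exist only for this example; StarRocks handles this internally through synchronous materialized views and aggregated tables.

```python
from collections import defaultdict


class PreAggregatedTable:
    """Toy rollup keyed by (page, hour) that is updated as rows arrive."""

    def __init__(self):
        self._rollup = defaultdict(lambda: {"views": 0, "total_ms": 0})

    def ingest(self, page, hour, latency_ms):
        # Fold each incoming row into its aggregate instead of storing it raw.
        agg = self._rollup[(page, hour)]
        agg["views"] += 1
        agg["total_ms"] += latency_ms

    def views(self, page, hour):
        # Queries read one precomputed entry rather than scanning raw rows.
        agg = self._rollup.get((page, hour))
        return agg["views"] if agg else 0

    def avg_latency_ms(self, page, hour):
        agg = self._rollup.get((page, hour))
        return agg["total_ms"] / agg["views"] if agg else None


table = PreAggregatedTable()
table.ingest("/home", 9, 120)
table.ingest("/home", 9, 80)
table.ingest("/docs", 9, 200)
print(table.views("/home", 9))           # 2
print(table.avg_latency_ms("/home", 9))  # 100.0
```

Because the per-query work no longer grows with the number of raw rows, this pattern suits workloads where many users run the same kind of aggregate query at once.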

These features expand the types of workloads and use cases StarRocks can support, especially involving high concurrency queries for real-time analytics applications. Try them for yourself!

Expanding the StarRocks Ecosystem

A key focus highlighted was expanding integrations with other technologies to enable more diverse end-to-end use cases. Some new connectors and integrations mentioned:

- Apache Flink Connector (published) - Enables piping data between Flink jobs and StarRocks clusters.

- Kubernetes Operator and Kubernetes Helm Chart (published) - Enables installing and uninstalling StarRocks containers.

- Kafka Sink Connector (beta) - Allows streaming data from Kafka topics into StarRocks, setting up integration with Debezium's CDC pipelines.

- Airbyte Destination Connector (beta) - Allows one-time batch and incremental (delta) batch imports from other software into StarRocks using Airbyte.

- Improved Support for dbt - Prioritized for the next few months.

- Superset Dialect and Driver (available in recent Superset releases) - Enables direct connections to StarRocks from the popular BI tool.

- Apache Iceberg - Getting StarRocks to work with Apache Iceberg's quickstart.

- Apache Hudi - Getting StarRocks to work with Apache Hudi's quickstart.

Community Feedback to Guide Roadmap

The Community Survey for June was announced to gather detailed feedback.

The feedback will provide crucial insights to help guide StarRocks roadmap priorities in upcoming releases. Community members are encouraged to participate and provide their perspectives.

Addressing Key Questions from Participants:

The audience raised more questions than we can list here. These were some of the most popular:

Q: If we already have data on disk, can we move it to S3 in a painless way?

A: Unfortunately, there is no direct migration path from on-disk storage to S3 with the shared data model. Data would need to be re-ingested into a separate StarRocks cluster configured for S3.

Q: How large should the BE local disk be for shared_data mode?

A: More local disk improves performance. Size it based on your working set and performance needs.

Q: Do we have query response time benchmarks between 2.x and 3.x shared?

A: Internal tests showed comparable performance between 2.x and 3.x shared_data mode on a cache hit. Without the cache, shared_data mode on StarRocks' own format is also comparable to querying external data sources in open formats such as Parquet and ORC on open data lakes.

Q: In the situation where local disk size is less than total size, can we configure the cache such that the most recent data is accessed from local disk but for older data SR will fetch from S3?

A: Yes, cache policies favor recent data, so newer data stays in the local cache while older data is evicted from the cache and fetched from S3 when it is needed.
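For readers who want to picture this behavior, here is a small Python sketch of a local cache sitting in front of an object store. It assumes a simple least-recently-used (LRU) eviction policy, which may differ from what StarRocks actually uses; the class `LocalDiskCache` is hypothetical, and a plain dict stands in for S3.

```python
from collections import OrderedDict


class LocalDiskCache:
    """Toy LRU cache in front of a slower object store (a dict stands in for S3)."""

    def __init__(self, object_store, capacity):
        self.object_store = object_store  # always holds the full data set
        self.capacity = capacity          # max number of blocks kept locally
        self._cache = OrderedDict()
        self.remote_reads = 0

    def read(self, block_id):
        if block_id in self._cache:
            self._cache.move_to_end(block_id)  # mark as most recently used
            return self._cache[block_id]
        # Cache miss: fetch from the object store, then keep a local copy.
        self.remote_reads += 1
        data = self.object_store[block_id]
        self._cache[block_id] = data
        if len(self._cache) > self.capacity:
            self._cache.popitem(last=False)  # drop the least recently used block
        return data

    def cached_blocks(self):
        return list(self._cache)
```

Note that eviction only removes the local copy. The object store keeps every block, so an evicted block is still readable, just with a slower first access.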

Q: Can the materialized view table have a longer data retention rule than the raw table on Iceberg?

A: This capability does not exist yet, but is a good feature request for the future.

Q: Why do you suggest we try shared data mode with k8s operator? Can we use it in EC2 deployment mode?

A: It works on VMs (e.g., AWS EC2 instances) as well. Kubernetes helps with elasticity and scaling.

Q: While ingesting from Kafka, can we configure storing the latest data (like 7 days) in cache and have older data move to cloud storage automatically?

A: Yes, TTL policies can automatically expire older Kafka data from the cache.

Q: Will the operator spilling use the same local disk cache folder as the data cache in BE? Or can we configure it to use a separate folder?

A: Spilling can be configured to use a separate high IOPS disk for best performance.

Q: Can we use Debezium connector to ingest data into StarRocks?

A: Not yet directly, but the Kafka connector (in beta) will enable a Debezium integration.

Closing Thoughts

StarRocks continues to evolve rapidly thanks to its open-source community.

Join our next webinar and contribute ideas, suggestions, and feedback.

Here's a snapshot of what you'll gain in our next webinar:

- Uncover successful strategies through use cases from StarRocks users.

- Understand potential challenges when implementing user-facing analytics and learn how to sidestep them.

- Get the inside scoop on why StarRocks should be your go-to solution for real-time, high-concurrency user-facing analytics.